International Nursing Association for Clinical Simulation and Learning (INACSL)

The International Nursing Association for Clinical Simulation and Learning (INACSL) is an association dedicated to advancing the science of healthcare simulation. With over 1,800 members worldwide, the organization’s mission is to be the global leader in the art and science of healthcare simulation through excellence in nursing education, practice, and research. INACSL also works to advance the science of nursing simulation by providing professional development, networking resources, and leadership in defining healthcare simulation standards of best practice.

INACSL membership provides the education, resources, and tools that best address current challenges and support learner, educator, and professional goals related to the latest developments in healthcare simulation. At the same time, membership enables these individuals to provide the most comprehensive education and training for high-quality patient care.

Whether someone is new to healthcare simulation and looking to understand the fundamentals or is experienced and seeking the latest updates and research, INACSL can provide the support they need. Membership in INACSL is based on connection, engagement, support, and inspiration.

Membership in INACSL is contingent upon the following standards of conduct:

- Respecting and valuing the knowledge, perspectives, contributions, and areas of competence of fellow members, colleagues, trainees, and professionals

- Sharing knowledge and providing mentorship and guidance for the professional development of other members, colleagues, trainees, and professionals

- Taking responsibility and credit only for work they have actually performed and to which they have contributed

- Appropriately acknowledging the work and contributions of others

- Maintaining appropriate boundaries and a professional environment that is free from bias or discrimination

INACSL History

In 1976, a group of nursing educators from around the U.S. came together at the Health Education Media Association (HEMA) conference in New Orleans and began a dialogue. That first group, which would one day grow into INACSL, included Charlene Clark, Kathleen Mikan, Kay Hodson-Carlton, and Joanne Crow.

After the initial meeting, interested persons met at the Biennial North American Learning Resource Centers (LRC) Conference and the National Conference on Nursing Skills Lab on an annual basis. In 1999, the group informally began discussing the need to network throughout the year rather than limiting networking to conference gatherings.

Interested leaders met again in 2001 and decided to create a formal organization. In April of 2002, the organization was named the International Nursing Association for Clinical Simulation and Learning (INACSL), and on January 3, 2003, INACSL was incorporated in the state of Texas.

INACSL Conference

Each year, the INACSL Conference serves as a leading forum where healthcare simulation professionals, researchers, and vendors gain and disseminate current, state-of-the-art knowledge of skills, simulation operations, and applications in an evidence-based venue. Through workshops, educational sessions, poster presentations, exhibitors, and Continuing Nursing Education (CNE) credits, INACSL offers valuable experiences, concepts, and networking opportunities.

INACSL Healthcare Simulation Standards of Best Practice

INACSL’s major priority, and its major contribution to medical simulation, is its carefully developed set of standards for simulation practice. Since they were first published in 2011, the INACSL Standards of Best Practice have guided the integration, use, and advancement of simulation-based experiences within academia, clinical practice, and research. They are designed to advance the science of clinical simulation, share best practices, and provide evidence-based guidelines for developing a comprehensive standard of practice.

Further, the Healthcare Simulation Standards of Best Practice provide a detailed process for evaluating and improving simulation operating procedures and delivery methods, one from which any medical simulation team can benefit. Adopting the Healthcare Simulation Standards demonstrates a commitment to quality and to implementing rigorous evidence-based practices in healthcare education to improve patient care.

The Healthcare Simulation Standards of Best Practice include:

- Professional Development: Initial and ongoing professional development supports the simulationist across their career. As the practice of simulation-based education grows, professional development allows the simulationist to stay current with new knowledge, provide high-quality simulation experiences, and meet the educational needs of the learners.

- Prebriefing: Prebriefing is a process that involves preparation and briefing. Prebriefing ensures that simulation learners are prepared for the educational content and are aware of the ground rules for the simulation-based experience.

- Simulation Design: Simulation-based experiences are purposefully designed to meet identified objectives and optimize the achievement of expected outcomes.

- Facilitation: Facilitation methods are varied, and the choice of a specific method depends on the learning needs of the learner and the expected outcomes. Facilitation provides the structure and process to guide participants to work cohesively, comprehend learning objectives, and develop a plan to achieve desired outcomes.

- The Debriefing Process: All simulation-based educational (SBE) activities must include a planned debriefing process. This debriefing process may include any of the activities of feedback, debriefing, and/or guided reflection.

- Operations: All simulation-based education programs require systems and infrastructure to support and maintain operations.

- Outcomes & Objectives: All simulation-based experiences (SBE) originate with the development of measurable objectives designed to achieve expected behaviors and outcomes.

- Professional Integrity: Professional integrity refers to the ethical behaviors and conduct expected of everyone involved in simulation-based experiences (SBE): facilitators, learners, and participants.

- Simulation-Enhanced IPE: Simulation-enhanced interprofessional education (Sim-IPE) enables learners from different healthcare professions to engage in a simulation-based experience to achieve linked or shared objectives and outcomes.

- Evaluation of Learning and Performance: Simulation-based experiences may include evaluation of the learner.

INACSL Simulation Education Program (ISEP)

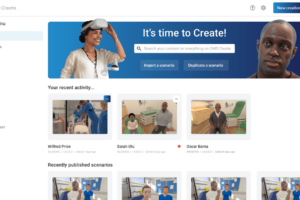

To bring just-in-time education and best practices to participants, INACSL has developed the “INACSL Simulation Education Program” (ISEP). The program aligns with the vision, mission, and core values of INACSL in promoting evidence-based strategies across healthcare simulation. Developed and facilitated by experts in the field, ISEP provides a pathway for novice and intermediate simulation educators, practitioners, and directors to learn and apply these evidence-based strategies in simulation.

According to INACSL, advanced practitioners enrolled in the program benefit from the refinement of skills and best practices in healthcare simulation-based education. A comprehensive, online program, ISEP integrates concepts of clinical simulation and instructional design methodologies into practice with interactive, activity-based, online projects. The program consists of 12 courses, and each incorporates participant discussion boards and video sessions with facilitators related to specific course projects and assignments. Additionally, some courses require participants to work together virtually to complete group projects.

INACSL Endorsement

INACSL established the Healthcare Simulation Standards Endorsement in 2022 to recognize healthcare institutions and practices that have demonstrated excellence in applying four core standards from the Healthcare Simulation Standards of Best Practice (HSSOBP) in their educational simulation programs. These four standards are Prebriefing: Preparation and Briefing, Facilitation, Professional Integrity, and The Debriefing Process. This HealthySimulation.com article explains the purpose of the healthcare simulation endorsement and shares the intended goals and benefits of this program.

To receive this endorsement, organizations must demonstrate a commitment to pursuing and sustaining excellence in these four standards, designated the “Core Four” Healthcare Simulation Standards. According to INACSL, the Healthcare Simulation Standards are meant to guide the “integration, use, and advancement of simulation-based experiences within academia, clinical practice, and research.”

The endorsement opportunity is meant to drive improvements in healthcare simulation through the application of evidence-based practices and research. The organization also aims to use the endorsement to promote ongoing performance evaluation and improvement within simulation programs, and to improve healthcare education and patient safety through the promotion and practice of high-quality simulation. Learn more about INACSL on the organization’s website or by reading the healthcare simulation education articles below.

Clinical Simulation in Nursing Journal

Clinical Simulation in Nursing is an international, peer-reviewed journal published online monthly. Clinical Simulation in Nursing is the official journal of INACSL and reflects the mission of INACSL to advance the science of healthcare simulation. Clinical Simulation in Nursing has a 2020 Impact Factor of 2.391, ranking favorably in the Nursing category. All articles are listed in the Science Citation Index Expanded, Journal Citation Reports/Science Edition, Social Science Citation Index, Journal Citation Reports/Social Sciences Edition, and Current Contents/Social and Behavioral Health Sciences.

INACSL Simulation Education Latest News

Global Medical Simulation News Update April 2024

NLN Simulation Innovation Resource Center (SIRC) Homegrown Solutions

Healthcare Simulation Research Update November 2023

Healthcare Simulation Research Update September 2023

Research Update: Clinical Simulation in Nursing July 2023

Latest Nursing Simulation Research from Clinical Simulation in Nursing Journal

Research Update: Clinical Simulation in Nursing May – June 2023

INACSL’s Nursing Simulation Conference Celebrates 20 Years

Women in Leadership Virtual Event Empowers Clinical Simulation Community

Healthcare Simulation Sustainability: Reuse, Refill, Recycle, Request

Uses of Simulation Educator Needs Assessment Tool (SENAT)

6 Additional Medical Simulation Keywords You Need to Know About

Sponsored Content: