Clinical Learning Environment Comparison Survey (CLECS)

As part of the Evaluating Healthcare Simulation tools, the Clinical Learning Environment Comparison Surveys (CLECS and CLECS 2.0 for Virtual) were created by Kim Leighton, PhD, RN, CHSOS, CHSE-A, ANEF, FSSH, FAAN. Leighton (2015) developed the CLECS to evaluate how well learning needs are met in the traditional and simulated undergraduate clinical environments. The CLECS was used in the landmark NCSBN National Simulation Study (Hayden et al., 2014). Leighton et al. (2021) modified the CLECS to create the CLECS 2.0 in response to the changes in simulation delivery during the COVID-19 pandemic. The CLECS 2.0 measures students’ perceptions of how well their learning needs were met in three environments: traditional clinical environment, face-to-face simulated clinical environment, and screen-based simulation environment. The CLECS and CLECS 2.0 can be downloaded below, along with the Chinese and Norwegian translated versions.

CLECS and CLECS 2.0 Downloads:

- Clinical Learning Environment Comparison Survey (CLECS)

- CLECS Subscale Items

- Sample CLECS Practice Example

- Translations:

- CLECS Chinese Version (Currently Offline)

- CLECS Norwegian Version

- Clinical Learning Environment Comparison Survey 2.0 for Virtual (CLECS 2.0)

Why the Clinical Learning Environment Comparison Survey (CLECS) was Developed: In the mid-2000s, discussions were occurring in earnest as to whether simulation could replace traditional clinical hours in the nursing curriculum. At the same time, community members were talking about the pros and cons of using simulation for high-stakes testing. The CLECS was developed as a way to evaluate how well undergraduate nursing students believed their learning needs were met in the traditional clinical environment and in the simulated clinical environment. Educators agreed that they needed to determine how similar or different the two learning environments were before decisions could be made as to whether one could replace the other. This tool was used in the landmark NCSBN National Simulation Study (Hayden et al., 2014).

Download the CLECS or see other language versions.

Sponsored Content:

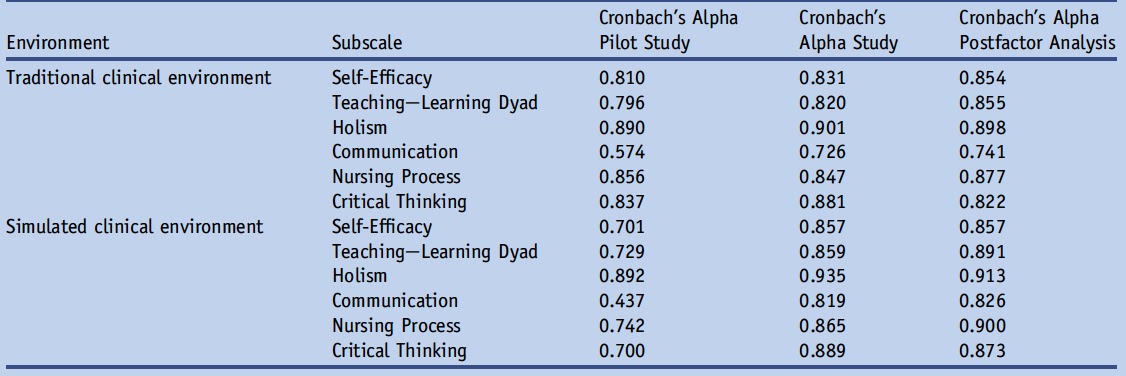

How the Clinical Learning Environment Comparison Survey (CLECS) was Developed: The CLECS was developed from topics identified in practice and in the simulation and nursing literature. The survey covered all aspects of clinical care, from the time a learner received their patient assignment through post-conference. A 12-member panel of experts (11 nursing faculty, 1 expert researcher) reviewed content and wording on the survey as well as its design. Subscales were defined by using an iterative process that included 5 undergraduate nursing faculty who taught in both clinical and simulation environments. The subscales underwent refinement during two pilot studies.

- Pilot study #1: 44 participants provided feedback about instruction clarity, ambiguous wording, and confusing or difficult-to-answer questions, and reviewed all study-related materials, including informed consent. Initial subscale internal consistencies were above .70 in all but one subscale.

- Pilot study #2: 22 participants took part to evaluate test-retest reliability and further assess the internal consistency of the subscales, which continued to rise.

Subscales:

- Self-efficacy (4 items)

- Teaching-Learning Dyad (5 items)

- Holism (6 items)

- Communication (4 items)

- Nursing Process (6 items)

- Critical Thinking (2 items)

Reliability and Validity of the CLECS

The study was conducted at three universities (two baccalaureate programs and one associate degree program) in three regions of the United States.

- Sample: 422 undergraduate nursing students who had provided care to at least one simulated patient and one human patient.

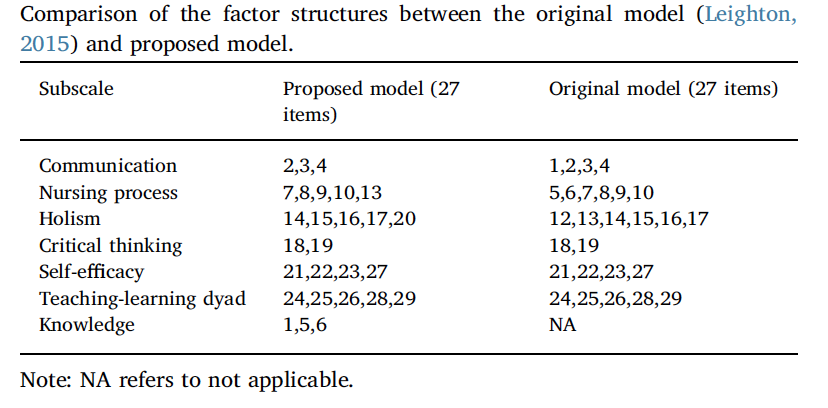

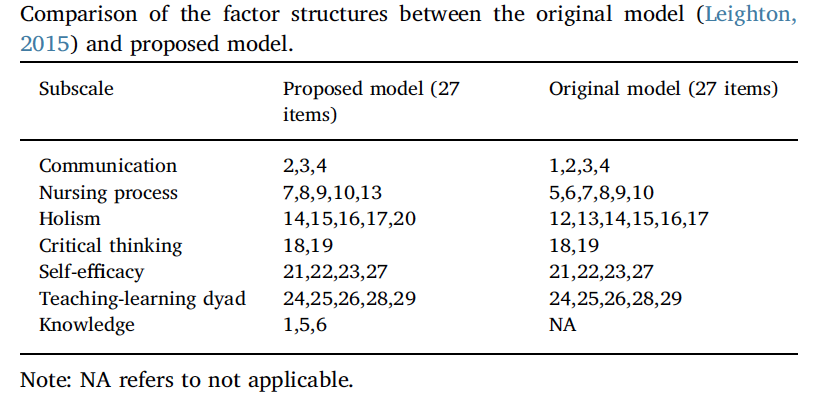

- Confirmatory factor analysis identified six subscales, with two items that did not align with subscales in the original CLECS study. These items were treated independently in the CLECS 2.0 study.

- The study team determined that the item “Evaluating the effects of medication administration to the patient” was an example of clinical reasoning. After consultation with Dr. Patricia Benner, the Critical Thinking subscale was renamed Clinical Reasoning, and that item was included in the Clinical Reasoning subscale.

- A second item “Thoroughly documenting patient care” was determined to be subsumed by the item “Communicating with interdisciplinary team” and was removed from the CLECS 2.0 following data analysis. These changes have also been reflected on the CLECS.

Using the CLECS

The CLECS can be used to evaluate facilitators and the curriculum. Although the tool was tested with undergraduate nursing students, other disciplines are piloting it within their professions. Two items fell out during analysis: 1) medication administration and 2) documentation. It was learned that not all simulations attended by students during the study had a medication and/or documentation component. The pedagogy has evolved since this tool’s creation 10 years ago, and most programs now include both in all their simulated clinical experiences. It is recommended that this survey be completed one time during the course of the program, prior to practicum experiences. You could also decide to use the CLECS at the end of each semester or at the end of each academic year. If your program is new or struggling, more frequent use of the tool is recommended.

Evaluation: The CLECS is useful for evaluating the learner’s perception of how well their learning needs were met in the simulated clinical environment and the traditional clinical environment. Results can be used in three ways:

Evaluation of Items and Subscales for Improvement: The goal is to create simulated clinical experiences that are equivalent to the traditional clinical experience, especially if your program is substituting simulation for clinical hours. Identify specific items and subscales where improvement is needed in the simulation lab. Make changes that enhance fidelity so that the simulated experiences become more realistic.

Evaluation of the Facilitator: When evaluating the performance of the facilitator, it is important to determine whether they are meeting the learning needs of the participants. After data are collected on an annual or semester basis, individual items, subscales, and the overall scores should be evaluated to determine the facilitator’s effectiveness. This can be done for each course facilitated and overall. Over time, results should be trended. Decisions can then be made as to whether the facilitator is performing to expectations or requires development or remediation.

Evaluation of the Simulation Operations Personnel: Similarly, when evaluating the performance of the simulation operations personnel, it is important to determine whether they are meeting the learning needs of the participants. At the end of each survey completion period, individual items, subscales, and the overall scores should be evaluated to determine the operations personnel’s effectiveness. This can be done for each course and overall. Over time, results should be trended. Decisions can then be made as to whether the operator is performing to expectations or requires development or remediation.

Scoring: There is no established method to score the CLECS. We suggest that you focus on the lowest-scoring items and subscales first, and prioritize the most important changes that should occur, as well as the ones that can be made quickly and easily. Use these low-scoring items as a needs assessment for creating a facilitator/simulation operations personnel development plan. An example is provided for those considering use of the tool.
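One way to act on this suggestion is to average the ratings in each subscale and sort the subscales from lowest to highest. The Python sketch below is illustrative only: the CLECS has no established scoring method, the function names and data layout are hypothetical, and the ratings are made-up numbers rather than study data. It assumes ratings are numeric and that Not Applicable answers are recorded as None.

```python
from statistics import mean

def subscale_means(responses):
    """Average the ratings in each subscale across all responses.

    `responses` is a list of dicts mapping a subscale name to the list of
    ratings one learner gave its items (an assumed layout, not a CLECS format).
    Ratings recorded as None (Not Applicable) are skipped.
    """
    pooled = {}
    for response in responses:
        for subscale, ratings in response.items():
            pooled.setdefault(subscale, []).extend(
                r for r in ratings if r is not None
            )
    return {name: mean(vals) for name, vals in pooled.items() if vals}

def lowest_first(means):
    """Return (subscale, mean) pairs sorted so the weakest subscale comes first."""
    return sorted(means.items(), key=lambda pair: pair[1])

# Made-up ratings for two learners in one environment (illustration only).
example_responses = [
    {"Self-efficacy": [3, 4, 3, 4], "Communication": [2, 2, None, 3]},
    {"Self-efficacy": [4, 4, 4, 3], "Communication": [1, 2, 2, 2]},
]

for name, score in lowest_first(subscale_means(example_responses)):
    print(f"{name}: {score:.2f}")
```

Running the same calculation separately for each environment (for example, traditional clinical and simulated clinical) would show where the two differ most.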

References: For additional detail regarding the development and psychometric analysis of the CLECS:

- Leighton, K. (2015, January). Development of the clinical learning environment comparison survey. Clinical Simulation in Nursing, 11(1), 44-51. http://dx.doi.org/10.1016/j.ecns.2014.11.002

- Hayden, J. K., Smiley, R. A., Alexander, M., Kardong-Edgren, S., & Jeffries, P. R. (2014). The NCSBN national simulation study: A longitudinal, randomized, controlled study replacing clinical hours with simulation in prelicensure nursing education. Journal of Nursing Regulation, 5(2), C1-S64. Retrieved from https://www.ncsbn.org/JNR_Simulation_Supplement.pdf.

Suggested citation: Leighton, K. (2018). Clinical Learning Environment Comparison Survey. Retrieved from https://www.healthysimulation.com/tools/evaluating-healthcare-simulation/clecs/.

Permission to Use: General use is already permitted by posting the statement: I understand that I have been granted permission by the creators of the requested evaluation instrument to use it for academic, clinical and/or research purposes. I agree that I will use the evaluation instrument only for its intended use, and will not alter it in any way. I will share findings as well as publication references with the instrument creator(s). I am allowed to place the evaluation instrument into electronic format for data collection. If an official ‘Permission to Use’ letter is required, please contact the primary author. Include the purpose of the official request (research, grant), the intended use of the tool, and the population with which it will be used.

With Gratitude from Dr. Leighton: The CLECS was developed during Dr. Leighton’s dissertation work under the direction of Dr. Sheldon Stick, whom she wishes to thank, saying: “His patient guidance and direction resulted in the creation of an instrument that has proven to be important to the field of simulation education. I couldn’t have done it without him! Thank you to my mentor and my friend–I am forever grateful to you!”

CLECS 2.0 for Computer-Based Simulation

During the unprecedented change brought by the COVID-19 pandemic, educators worldwide were forced to quickly move clinical simulation activities to a screen-based format. The original Clinical Learning Environment Comparison Survey, used in the landmark National Council of State Boards of Nursing simulation study, has been revised to include screen-based simulation! The CLECS 2.0 was used to learn pre-licensure nursing students’ perceptions of how well their learning needs are met in three environments: traditional clinical environment, face-to-face simulated clinical environment, and screen-based simulation environment.

Whether you have been teaching with screen-based simulation for years or only for 2 weeks, you can use the CLECS 2.0 to learn whether your clinical teaching methods are effectively helping your students to learn, while collecting data to support your decisions related to using screen-based simulation as part of your pandemic response. When using the CLECS 2.0, please substitute the headings with the clinical and simulation environments you are studying (e.g., clinical, virtual reality, manikin-based, etc.).

Download the CLECS 2.0.

CLECS 2.0 Research Study Findings:

The research question was: How well do the students believe their learning needs were met in the traditional clinical environment, face-to-face simulation (F2FS) environment, and screen-based simulation (SBS) environment?

- Sample size was 174; however, many items were marked Not Applicable, and the statistician advised analyzing only the complete responses. The final sample size was 113. Participants were from the US, Japan, and Canada. About half were in baccalaureate programs, 36% in associate degree programs, 6% in diploma programs, and 3% in licensed practical nursing programs.

- Item scores were typically greatest for traditional clinical and lowest for SBS. In the paper, we compare item responses between the environments and also address the number of ‘not applicable’ responses in each environment.

- Traditional Clinical vs F2FS: differences in only two items, favoring traditional clinical

- Traditional Clinical vs SBS: all learning needs better met in traditional clinical

- F2FS vs SBS: differences in 10 of 29 items, favoring F2FS
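The drop from 174 to 113 participants reflects analyzing only complete responses. A minimal sketch of that kind of filter follows, assuming each response is a dict of item ratings with None standing in for Not Applicable. The item keys, function names, and data are hypothetical, and the study’s actual procedure may have differed.

```python
def complete_cases(responses):
    """Keep only responses in which every item has a rating (no None values)."""
    return [r for r in responses if all(v is not None for v in r.values())]

def not_applicable_counts(responses):
    """Count how many responses marked each item Not Applicable (None)."""
    counts = {}
    for response in responses:
        for item, rating in response.items():
            counts[item] = counts.get(item, 0) + (rating is None)
    return counts

# Made-up responses to two hypothetical items (illustration only).
example = [
    {"item_1": 4, "item_2": 3},
    {"item_1": None, "item_2": 2},
    {"item_1": 3, "item_2": 4},
]

print(len(complete_cases(example)), not_applicable_counts(example))
```

Counting Not Applicable answers per item, as in the second function, is one way to see which learning needs an environment did not give students a chance to address.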

The validity evidence for the CLECS suggests that the instrument can be used in any type of clinical learning environment; however, if items are marked NA, then we need to consider why, especially if SBS is allowed to replace traditional clinical and F2FS activities. Two items did not align with subscales in the original CLECS study and were treated independently in the CLECS 2.0 study. The study team determined that the item “Evaluating the effects of medication administration to the patient” was an example of clinical reasoning. After consultation with Dr. Patricia Benner, the Critical Thinking subscale was renamed Clinical Reasoning, and that item was included in the Clinical Reasoning subscale. A second item, “Thoroughly documenting patient care,” was determined to be subsumed by the item “Communicating with interdisciplinary team” and was removed from the CLECS 2.0 following data analysis. Data for this study were collected in the uncontrolled environment of the early pandemic. Unfortunately, the sample size was too low to establish reliability. We encourage further study of the CLECS 2.0.

References:

- Leighton, K., Kardong-Edgren, S., Schneidereith, T., Foisy-Doll, C., & Wuestney, K. (2021). Meeting undergraduate nursing students’ clinical needs: A comparison of traditional clinical, face-to-face simulation, and screen-based simulation learning environments. Nurse Educator, 46(6), 349-354. https://doi.org/10.1097/NNE.0000000000001064

- Website suggested citation: Leighton, K., Kardong-Edgren, S., Schneidereith, T., Foisy-Doll, C., & Wuestney, K. (2021). Clinical Learning Environment Comparison Survey 2.0. Retrieved from https://www.healthysimulation.com/tools/evaluating-healthcare-simulation/clinical-learning-environment-comparison-survey-clecs/

- Additional Permissions: If you need an official ‘Permission to Use’ letter or have questions about this version, contact Dr. Kim Leighton.

CLECS and CLECS 2.0 Downloads (English Versions):

- Clinical Learning Environment Comparison Survey (CLECS)

- Clinical Learning Environment Comparison Survey 2.0 for Virtual (CLECS 2.0)

CLECS Chinese Version (Currently Offline)

How the CLECS (Chinese Version) was Translated by Yaohua Gu, RN, MSN: The CLECS was translated into Chinese following standardized guidelines that included forward translation, back translation, cultural adaptation, and pilot testing. The forward translation was performed independently by two bilingual senior nursing lecturers. These two translators combined their translations and ensured that the initial Chinese version of the CLECS matched the English version linguistically and culturally. Then, two other bilingual translators, blinded to the original CLECS, translated the initial Chinese version back into English. After the back-translated CLECS was compared with the original English version, the initial Chinese version was further modified based on the consensus of these four translators. Next, one associate professor, one senior lecturer, one nursing postgraduate, and one English instructor majoring in linguistics were invited to evaluate each item and give their opinions on the semantic, idiomatic, experiential, conceptual, and cultural equivalency of the modified Chinese version. When consensus was reached on all items, the CLECS (Chinese Version) was finalized.

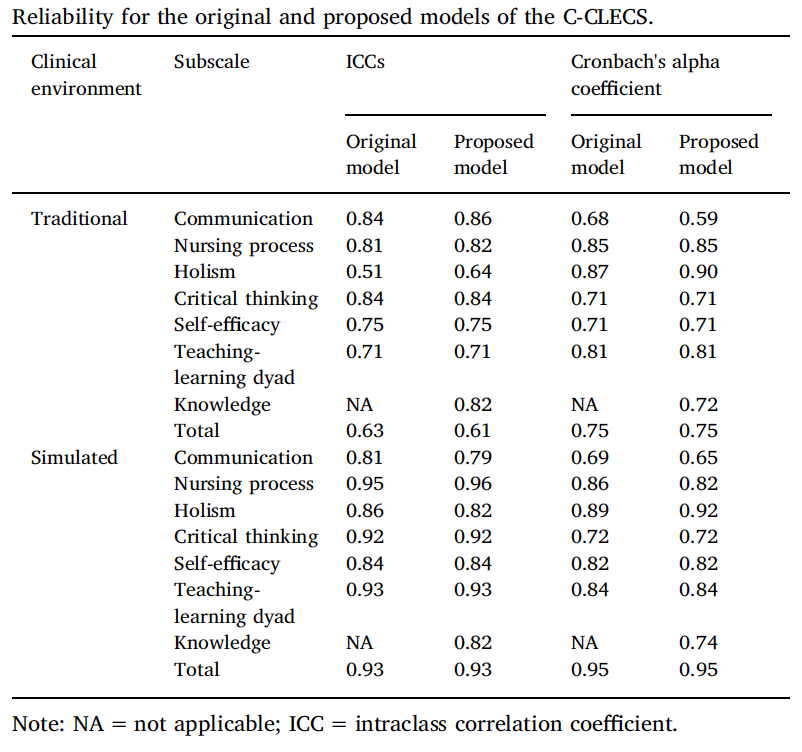

Reliability and Validity of the CLECS (Chinese Version):

- Settings: The study was conducted in two baccalaureate programs at two universities in two regions of China.

- Sample: 179 undergraduate nursing students who had provided care to at least one simulated patient and one human patient.

- Methods: An exploratory factor analysis was used to establish a modified factor structure of CLECS (Chinese version); a confirmatory factor analysis verified its construct validity. Reliability of the CLECS (Chinese version) was estimated using the intraclass correlation coefficients (ICCs) and Cronbach’s alpha coefficients.

- Results:

References:

- Gu, Y., Xiong, L., Bai, J., Hu, J., & Tan, X. (2018). Chinese version of the clinical learning environment comparison survey: Assessment of reliability and validity. Nurse Education Today, 71, 121-128.

- Suggested citation: Gu, Y., Xiong, L., Bai, J., Hu, J., & Tan, X. (2018). Clinical Learning Environment Comparison Survey – Chinese Version. Retrieved from https://www.healthysimulation.com/tools/evaluating-healthcare-simulation/clinical-learning-environment-comparison-survey-clecs/

- Additional Permissions: If you need an official ‘Permission to Use’ letter or have questions about this Chinese version, please contact Gu Yaohua.

Download the CLECS Chinese Version here (Currently Offline).

CLECS Norwegian Version

How the CLECS Norwegian Version was Translated by Camilla Olaussen, RN, PhD, MNSc: The translation process included forward translation, the use of an expert panel, back translation, pre-testing, and cognitive interviewing. The forward translation was made independently by two translators, both registered nurses and nursing teachers familiar with the terminology of the area covered by the CLECS. An expert panel with five members (the two original translators and three experienced nursing teachers, all registered nurses) was established to resolve discrepancies and to reconcile the two forward translations into a single version. The back translation was made by a professional translator who is a native speaker of English. The back-translated version was then sent to Dr. Kim Leighton together with the original CLECS to make sure that the two versions did not differ in conceptual meaning. The CLECS (Norwegian Version) was then pre-tested on the target population, followed by a focus group interview in which each item was thoroughly evaluated by the pre-test respondents. The final CLECS (Norwegian Version) was the result of all the iterations described above.

Reliability and Validity of the CLECS (Norwegian Version)

- Setting: A university college in Norway that provides bachelor education in nursing.

- Sample: 122 nursing students in their second semester of the education program.

- Methods: To verify internal construct validity, confirmatory factor analysis (CFA) was used to investigate whether the original six-factor model fitted the observed data. Internal consistency was assessed with Cronbach’s alpha, and test-retest reliability was assessed with the intraclass correlation coefficient (ICC).

- Results: CFA goodness-of-fit indices (χ²/df = 1.409, CFI = .915, RMSEA = .058) showed acceptable model fit with the original six-factor model. Cronbach’s alpha for the CLECS (Norwegian Version) subscales ranged from .69 to .89. ICC ranged from .55 to .75, except for two subscales with values below .5.
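For readers less familiar with the internal-consistency statistic reported above, Cronbach’s alpha for a subscale with k items is k/(k-1) multiplied by (1 minus the sum of the item variances divided by the variance of the total scores). The sketch below computes it in plain Python. The function name and the ratings are illustrative assumptions, not the study’s code or data.

```python
from statistics import variance

def cronbach_alpha(rows):
    """Cronbach's alpha for one subscale.

    `rows` holds one list of item ratings per respondent; every list has the
    same length (k items) and no missing values.
    """
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item (column) across respondents.
    item_variances = [variance(column) for column in zip(*rows)]
    # Sample variance of each respondent's summed subscale score.
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)

# Made-up ratings: four respondents answering a three-item subscale.
ratings = [[2, 3, 3], [4, 4, 5], [1, 2, 2], [3, 3, 4]]
print(round(cronbach_alpha(ratings), 3))
```

Values closer to 1 indicate that the items in a subscale vary together; the sketch uses sample variances throughout, which is one common convention.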

References:

- Olaussen, C., Jelsness-Jørgensen, L. P., Tvedt, C. R., Hofoss, D., Aase, I., & Steindal, S. A. (2020). Psychometric properties of the Norwegian version of the clinical learning environment comparison survey. Nursing Open, 1-8. DOI: 10.1002/nop2.742

- Webpage suggested citation: Olaussen, C., Jelsness-Jørgensen, L. P., Tvedt, C. R., Hofoss, D., Aase, I., & Steindal, S. A. (2020). Clinical Learning Environment Comparison Survey – Norwegian Version. Retrieved from https://www.healthysimulation.com/tools/evaluating-healthcare-simulation/clinical-learning-environment-comparison-survey-clecs/

- If you need an official ‘Permission to Use’ letter or have questions about this version, please contact Camilla Olaussen.

Download the CLECS Norwegian Version.
