Scaling Assessment in Healthcare Simulation: Enhancing Validity and Reliability

In healthcare education, assessing professional competency through healthcare simulation is a complex challenge, particularly when these evaluations are scaled. The need for assessments that are fair, reliable, and valid across various levels, domains, and populations has led to the exploration of advanced scaling techniques. This HealthySimulation.com article by Melissa Tully, BSN, MHPE, RN-BC, examines scaling assessment in simulation-based competency assessment and discusses its importance, its challenges, and the methodologies used to achieve scalable evaluations.

Scaling assessment refers to the adjustment of evaluation metrics and methodologies to ensure consistency, fairness, and accuracy across different assessment contexts. In simulation-based competency assessment, scaling is crucial for several reasons:

- Comparability: Scaling allows performance outcomes to be compared across different clinical simulation scenarios, participant levels (e.g., novice to expert), and even institutions. This is key for workforce development.

- Standardization: Scaling ensures that assessments adhere to standardized benchmarks and helps align competency evaluations with educational goals and accreditation standards.

- Adaptability: Effective scaling supports the adaptation of healthcare simulation scenarios for diverse educational needs and educational outcomes, which enhances the flexibility and utility of healthcare simulation as an educational tool.

Types of Scaling

Linear vs. Nonlinear Scaling

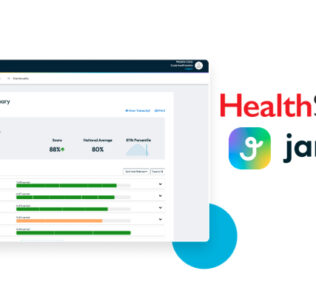

Linear Scaling involves a direct proportionality in the scaling process, where adjustments are made uniformly across all levels of assessment difficulty or complexity. This approach is straightforward but may not always accurately reflect the nuances of skill progression in healthcare competencies.

Nonlinear Scaling acknowledges that competency development may not progress at a constant rate across different domains or levels of ability. More sophisticated mathematical models can adjust for these variances. For example, an individual may not be as competent in communication skills as they are in clinical reasoning skills.
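To make the contrast concrete, the following is a minimal sketch, not a recommended scoring rule. It assumes a hypothetical 30-point checklist reported on a 0 to 100 scale, and it uses a logistic curve as one possible nonlinear model. The function names and the `midpoint` and `steepness` values are illustrative assumptions, not something prescribed in this article.

```python
import math


def linear_scale(raw, raw_min, raw_max, scale_min=0.0, scale_max=100.0):
    """Linear scaling: every raw point is worth the same number of scale points."""
    fraction = (raw - raw_min) / (raw_max - raw_min)
    return scale_min + fraction * (scale_max - scale_min)


def logistic_scale(raw, midpoint, steepness, scale_min=0.0, scale_max=100.0):
    """Nonlinear scaling: an S-shaped curve that is steepest near the midpoint."""
    fraction = 1.0 / (1.0 + math.exp(-steepness * (raw - midpoint)))
    return scale_min + fraction * (scale_max - scale_min)


# Hypothetical raw scores on a 30-point checklist (illustrative values only).
for raw in (5, 15, 25):
    print(raw,
          round(linear_scale(raw, 0, 30), 1),
          round(logistic_scale(raw, 15, 0.3), 1))
```

Under the linear rule, a five-point raw gain is worth the same amount anywhere on the scale. Under the logistic rule, the same gain is worth more near the midpoint than near the top of the scale, which is one way to represent uneven skill progression.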

Vertical vs. Horizontal Scaling

Vertical Scaling is used to compare assessments across different levels of expertise or stages of education, with a focus on the progression of skills and knowledge over time. This approach is integral to longitudinal studies of competency development.

Horizontal Scaling compares assessments at the same ability level but in different domains or specializations, which ensures that evaluations are fair across diverse areas of healthcare practice. This approach is most often applied to global professional competencies.

Advanced Techniques and Strategies for Scaling Assessment

Item Response Theory (IRT) and Rasch Model

IRT and the Rasch model are sophisticated statistical methods used for scaling assessment. They provide a framework for understanding how individual test items relate to the latent traits or abilities being measured, such as clinical judgment or technical skill. These models offer insights into item difficulty and participant ability, which enhances the precision of competency assessments.
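As a brief illustration, the dichotomous Rasch model expresses the probability that a participant succeeds on an item as a logistic function of the difference between participant ability and item difficulty, both measured in logits. The sketch below only evaluates that formula; it does not estimate abilities or difficulties from data, and the function name and example values are hypothetical.

```python
import math


def rasch_probability(ability, difficulty):
    """Probability of success on an item under the dichotomous Rasch model.

    Both arguments are in logits. Only their difference matters.
    """
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


# Hypothetical participant (ability 1.0 logit) on three checklist items
# of increasing difficulty (illustrative values only).
for difficulty in (-1.0, 0.0, 2.0):
    print(difficulty, round(rasch_probability(1.0, difficulty), 3))
```

When ability equals difficulty, the probability of success is exactly 0.5, which is what places items and participants on a single shared scale.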

Standard Setting, Equating, and Linking

Standard Setting involves determining the minimum performance levels required for different competency stages or certification criteria. This ensures that the pass criteria reflect the requisite levels of professional competency.

Equating is a statistical process to ensure that scores from different assessment forms or simulations are comparable, which addresses variations in difficulty and participant groups.

Linking refers to the techniques used to compare scores across different assessments or over time. This supports longitudinal studies of competency development and helps scale assessments across different contexts.
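One simple form of equating is linear equating, which places a score from one form onto the scale of another by matching the means and standard deviations of the two score distributions. The sketch below assumes that comparable groups completed each form and uses made-up scores. It illustrates the idea only and is not a substitute for a properly designed equating study.

```python
from statistics import mean, pstdev


def linear_equate(score_x, form_x_scores, form_y_scores):
    """Place a Form X score on the Form Y scale by matching mean and spread."""
    slope = pstdev(form_y_scores) / pstdev(form_x_scores)
    return mean(form_y_scores) + slope * (score_x - mean(form_x_scores))


# Hypothetical scores from two simulation scenarios (illustrative values only).
form_x = [10, 12, 14, 16, 18]  # the harder scenario, lower raw scores
form_y = [13, 16, 19, 22, 25]  # the easier scenario, higher raw scores

print(round(linear_equate(16, form_x, form_y), 2))
```

A participant who scores at the Form X mean is mapped to the Form Y mean, and scores above or below the mean are stretched or compressed according to the ratio of the two standard deviations.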

Enhancing Validity, Reliability, and Comparability

The application of scaling techniques in simulation-based assessments addresses several key challenges:

- Validity: Scaling ensures that assessments accurately measure the competencies they are intended to measure across various scenarios and levels of complexity.

- Reliability: Through standardization and advanced statistical methods, scaling enhances the consistency of assessment outcomes, which makes them more dependable indicators of true competency.

- Comparability: Scaling enables the fair comparison of assessment results within the same program and across different institutions and healthcare environments.
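Consistency of this kind is usually quantified with a reliability coefficient. As one hedged example, the sketch below computes Cronbach's alpha, a widely used index of internal consistency, for a small set of made-up checklist scores. The choice of alpha and the data layout are assumptions made for illustration, and other indices (for instance, inter-rater agreement statistics) may suit a given simulation assessment better.

```python
from statistics import pvariance


def cronbach_alpha(score_rows):
    """Cronbach's alpha for a list of rows, one row of item scores per participant."""
    k = len(score_rows[0])                      # number of items
    item_columns = list(zip(*score_rows))       # regroup the scores by item
    item_variance_sum = sum(pvariance(col) for col in item_columns)
    total_variance = pvariance([sum(row) for row in score_rows])
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


# Hypothetical ratings of four participants on three items (illustrative only).
ratings = [
    [3, 4, 3],
    [2, 2, 3],
    [4, 5, 4],
    [1, 2, 2],
]
print(round(cronbach_alpha(ratings), 3))
```

Values closer to 1 indicate that the items rank participants more consistently. The function assumes at least two items and some variation in total scores.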

Examples and Evidence From Research

Research has shown the effectiveness of scaling assessment in healthcare simulation-based education. For example, studies that use the Rasch model have been able to identify items that do not perform as expected, which leads to improvements in assessment tools. Similarly, the implementation of standard-setting techniques has improved the alignment of healthcare simulation assessments with clinical competency standards. This helps ensure that healthcare professionals are adequately prepared for real-world challenges.

Scaling assessment in simulation-based competency evaluations is a complex but essential endeavor to ensure that assessments are valid, reliable, and comparable across various contexts. By employing advanced scaling techniques and strategies, educators and evaluators can enhance the educational impact of simulation, which ultimately contributes to the development of a competent and adaptable healthcare workforce.

Why Evaluate Clinical Simulation Experiences?

Evaluating healthcare simulation is crucial to ensure that educators can demonstrate return on investment (ROI) for both learning and program outcomes. Healthcare simulation has proved to be a highly effective education and training tool across all medical fields. An increased push toward patient safety has led many facilities and institutions to revise their medical education systems by thoroughly evaluating healthcare simulation education in medicine.

The INACSL Healthcare Simulation Standards of Best Practice include a standard on Evaluation of Learning and Performance. The Standard states that simulation-based experiences should be evaluated with a valid and reliable evaluation tool by evaluators who have been trained in the use of the tool. To meet the Evaluation Standard, specific criteria should be met for each simulation-based experience (SBE):

- Determine the method of learner evaluation before the SBE

- SBEs may be selected for formative evaluation

- SBEs may be selected for summative evaluation

- SBEs may be selected for high-stakes evaluation

References

- Assessment Systems. (2023). What is scaled scoring on a test?

- Assessment Systems. (2023). What is test scaling?

- Assessment Systems. (2023). What is vertical scaling in assessment?

- Cox, J. (2022). Understanding scaled scores on standardized tests. ThoughtCo.

- INACSL Standards Committee, McMahon, E., Jimenez, F.A., Lawrence, K. & Victor, J. (2021, September). Healthcare Simulation Standards of Best Practice Evaluation of Learning and Performance. Clinical Simulation in Nursing, 58, 54-56.

- Pointerpro. (2018). How to create your own assessment: the ultimate guide.

- Public Health Foundation. (2021). Competency assessment – 2021.

Melissa Jo Tully, BSN, MHPE, RN-BC, is a highly accomplished healthcare professional passionate about education and patient safety. With a Master’s degree in Health Professional Education from the College of Medicine at Vanderbilt University, Melissa has honed her expertise in developing innovative programs to enhance healthcare performance and quality. As an experienced nurse, Melissa followed her passion for lifelong learning by exploring nursing specialties through her early nursing career as a float nurse, including working in critical care, emergency room, labor and delivery, and hospice. Melissa brings a wealth of knowledge and experience to her role. Since 2009, she has been dedicated to simulation-based education programs, both brick-and-mortar and virtual. Driven by a deep-rooted desire to enhance patient safety and quality of care, Melissa continuously seeks opportunities to push the boundaries of simulation-based education. Through her expertise and leadership, she inspires and empowers healthcare professionals to strive for continuous improvement and positively impact patients’ lives. Melissa Tully is a trailblazer in healthcare simulation education, and her dedication to advancing the field is evident in her contributions and achievements.
