Simulation Effectiveness Tool – Modified (SET-M)

As part of the Evaluating Healthcare Simulation tools, the Simulation Effectiveness Tool – Modified (SET-M) was revised and modified from the Simulation Effectiveness Tool (2005) by CAE Healthcare (formerly known as METI), as part of the Program of Nursing Curriculum Integration. The SET-M is designed for evaluation of clinical simulation scenarios. Leighton, Ravert, Mudra, and Macintosh (2015) updated the SET to incorporate simulation standards of best practices and updated terminology. The researchers determined the tool required updating to capture the desired outcomes – learner’s perceptions of how well their learning needs in the simulation environment were being met. SET-M is available in Turkish and Spanish versions below.

Downloadable Files for SET-M:

- The Simulation Effectiveness Tool – Modified

- The Simulation Effectiveness Tool – Modified with Subscales

- Sample SET-M Practice. Practice using this with your team to identify areas in need of improvement and create an improvement plan, then prioritize it! Consider how you might use these results to provide coaching or conduct an evaluation session with a simulationist.

- SET-M (Spanish Version)

- SET-M (Turkish Version)

Permission to Use FREELY: General use is already permitted by posting the statement: I understand that I have been granted permission by the creators of the requested evaluation instrument to use it for academic, clinical and/or research purposes. I agree that I will use the evaluation instrument only for its intended use, and will not alter it in any way. I will share findings as well as publication references with the instrument creator(s). I am allowed to place the evaluation instrument into electronic format for data collection. If official ‘Permission to Use’ letter is required, please contact the primary author. Include the purpose of the official request (research, grant), the intended use of the tool and with what population.

Update Note for Using SET-M for Virtual Simulation: As virtual simulation is a type of simulation, one can reasonably expect that simulation evaluation instruments can be used to measure the same concepts in that environment. Revising the SET-M wording from “verbalizing” to “communicating” will better allow for learner responses whether the debriefing occurs synchronously immediately following virtual experiences, asynchronously at an established time following one or more experiences, or as a written reflection completed according to a set of questions.

How the Simulation Effectiveness Tool – Modified (SET-M) was Developed: These reports, standards, and guidelines were used to modify language in the original SET:

- INACSL Standards of Best Practice: SimulationSM (INACSL Standards Committee, 2013, 2016)

- Quality and Safety Education for Nurses (QSEN) competencies (Cronenwett et al., 2007)

- Essentials of Baccalaureate Education for Professional Nursing Practice (American Association of Colleges of Nursing, 2008)

The goal was to update the survey’s statements to include active verbs and current terminology, and to address missing or under-weighted topics. For example, when the original tool was designed, many simulationists were prebriefing, but it was not yet the standard. In addition, our understanding of the importance of debriefing has grown; therefore, we expanded that section. The SET-M grew to 19 items, scored on a 3-point Likert scale. Details about specific item changes can be found in the manuscript referenced below.

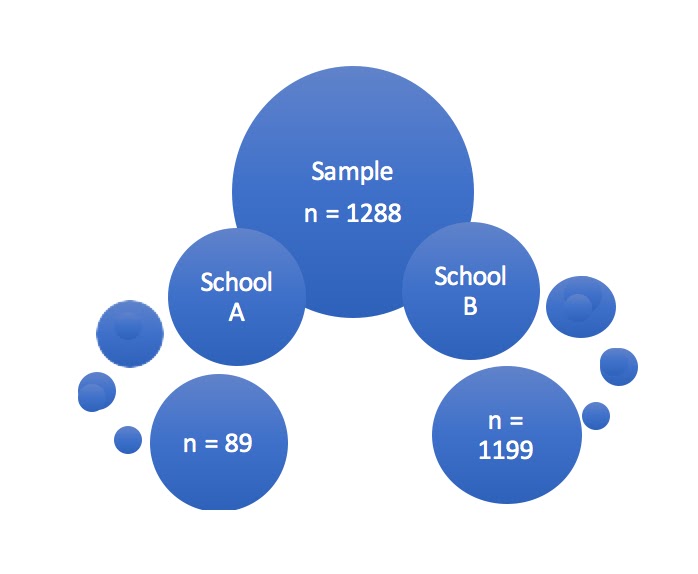

Reliability and Validity of the SET-M for Nursing: The study was conducted at two universities, in a total of 13 sites.

Sample: 1288 undergraduate nursing students in the medical-surgical semester participated in the study. Sample for Analysis:

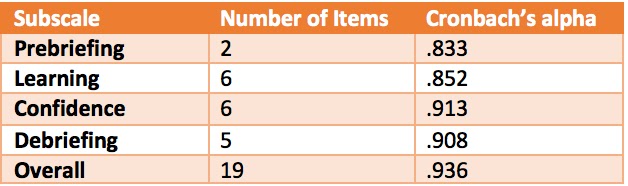

Subscale Reliability:

Factor analysis increased the number of subscales to four:

1. Prebriefing

2. Learning (original scale)

3. Confidence (original scale)

4. Debriefing

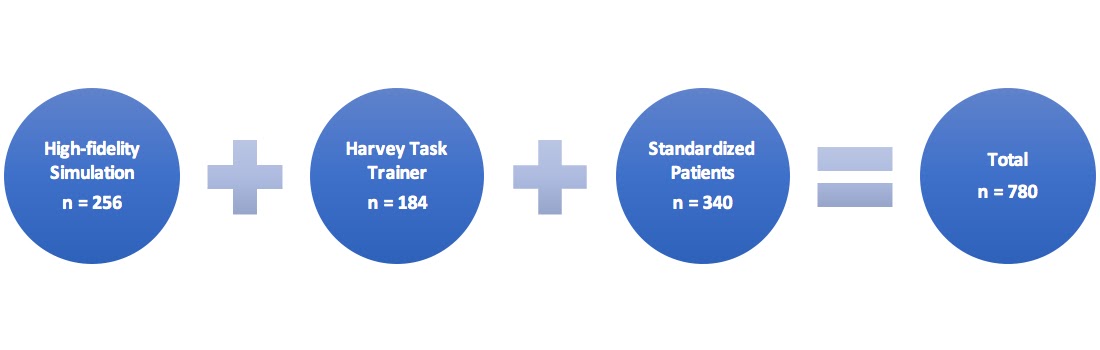

Reliability and Validity of the SET-M for Medicine: The study was conducted at one medical school. Third- and fourth-semester medical students participated in the study. Each participant completed an already scheduled simulation experience using one of three methods: high-fidelity simulation, Harvey task trainer, or standardized patient. The factor analysis for this study found a single scale with no subscales. Of note, at the decision of the school’s faculty, data were not collected for the original prebriefing or debriefing sections.

Reliability of SET-M in Neonatal Resuscitation:

- Palmer, E., Labant, A. L., Edwards, T. F., & Boothby, J. (2019). A collaborative partnership for improving newborn safety: Using simulation for neonatal resuscitation training. The Journal of Continuing Education: Thorofare, 50(7), 319-324. doi: 10.3928/00220124-20190612-07

Using the SET-M: The SET-M is designed for evaluation of simulation scenarios. The tool has been tested in the nursing and medicine educational environments and with high-fidelity mannequins, Harvey simulator, and standardized patients. The concepts are broad; feedback has indicated that the tool is appropriate for the clinical simulation learning environments as well.

The SET-M can be administered following every simulated clinical experience or selected ones. Many schools have learners complete the SET-M after every scenario in every course. This allows for early identification of concerns or trends in the data; however, it can lead to survey fatigue among participants causing them to just mark every response the same, regardless of their real thoughts–they just want to get it over with!

Consider the stability of your learners and your facilitators. If the same facilitator has used the same scenario for more than two semesters, and the SET-M scores are acceptable–is it important to continue to collect the data? On the other hand, scores may have been acceptable for two semesters and then the facilitator changes–you should consider going back to data collection to facilitate early identification of concerns. Regardless of your decision, ensure it is consistent and defensible for accreditation and institutional effectiveness purposes.

Evaluation: The SET-M is useful for evaluating the learner’s perception of how effective the simulation was toward meeting their learning needs. Results can be used in three ways:

- Design and implementation of the simulated clinical experience: are there areas that can or should be improved?

- What percentage of the learners are marking the statement lower–if one person marks it low but the rest mark it high, then the impetus for change is lessened. However, if over 25% of the participants mark a statement low, then facilitators must reflect on the experience and make changes.
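The 25% rule of thumb above can be checked with a few lines of code. The following is only a minimal sketch: it assumes responses are stored as integers 1 to 3, that "low" means the lowest option (coded 1), and the names `flag_items`, `LOW_SCORE`, and `sample` are hypothetical. The ratings are made up for illustration.

```python
from typing import Dict, List

LOW_SCORE = 1      # assumption: the lowest response option is coded 1
THRESHOLD = 0.25   # "over 25%" taken from the guidance above


def flag_items(responses: Dict[str, List[int]]) -> Dict[str, float]:
    """Return {item: share of low ratings} for items above the threshold."""
    flagged = {}
    for item, ratings in responses.items():
        if not ratings:
            continue  # skip items nobody answered
        low_share = sum(1 for r in ratings if r == LOW_SCORE) / len(ratings)
        if low_share > THRESHOLD:
            flagged[item] = low_share
    return flagged


# Made-up ratings from eight learners for two statements.
sample = {
    "item_01": [3, 3, 2, 3, 1, 3, 3, 3],  # 1 of 8 marked low (12.5%)
    "item_02": [1, 1, 2, 3, 1, 3, 2, 3],  # 3 of 8 marked low (37.5%)
}
print(flag_items(sample))  # {'item_02': 0.375}
```

An item with exactly 25% low ratings is not flagged here, because the guidance says "over 25%"; adjust the comparison if your program defines the cutoff differently.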

Evaluation of the Facilitator: When evaluating the performance of the facilitator, it is important to determine whether they are meeting the learning needs of the participants. At the end of each semester, individual items, subscales, and the overall scores should be evaluated to determine the facilitator’s effectiveness. This can be done for each course facilitated and overall. Over time, results should be trended. Decisions can then be made as to whether the facilitator is performing to expectations or requires development or remediation.

Evaluation of the Simulation Operations Personnel: Similarly, when evaluating the performance of the simulation operations personnel, it is important to determine whether they are meeting the learning needs of the participants. At the end of each semester, individual items, subscales, and the overall scores should be evaluated to determine the operations personnel’s effectiveness. This can be done for each course and for the overall semester. Over time, results should be trended. Decisions can then be made as to whether the operator is performing to expectations or requires development or remediation.

Scoring: There is no established method to score the SET-M. We suggest that you focus on the lowest scoring items and subscales first, and prioritize the most important changes that should occur, as well as the ones that can be made quickly and easily. Use these low scoring items as a needs assessment for creating a facilitator/simulation operations personnel development plan. An example is provided if you decide to consider use of the tool.
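Because there is no established scoring method, the following is just one possible way to surface the lowest scoring items first. It is a sketch that assumes each response is coded 1 to 3 and ranks items by their mean rating; the function name `rank_items_lowest_first` and the sample data are hypothetical.

```python
from statistics import mean
from typing import Dict, List, Tuple


def rank_items_lowest_first(
    responses: Dict[str, List[int]]
) -> List[Tuple[str, float]]:
    """Return (item, mean rating) pairs sorted from lowest to highest mean."""
    means = {item: mean(r) for item, r in responses.items() if r}
    return sorted(means.items(), key=lambda pair: pair[1])


# Made-up ratings for three statements.
ratings = {
    "item_a": [3, 3, 3],
    "item_b": [1, 2, 3],
    "item_c": [2, 3, 3],
}
for item, avg in rank_items_lowest_first(ratings):
    print(f"{item}: {avg:.2f}")
```

The items at the top of this list are candidates for the needs assessment described above; which of them matter most remains a judgment call for the team.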

References:

For additional detail regarding the development and psychometric analysis of the SET-M:

- Leighton, K., Ravert, P., Mudra, V., & Macintosh, C. (2015). Updating the Simulation Effectiveness Tool: Item modifications and reevaluation of psychometric properties. Nursing Education Perspectives, 36(5), 317-323. doi: 10.5480/15-1671

- Manuscripts for the psychometric analysis of the SET-M in medical education are under development.

- American Association of Colleges of Nursing. (2008). The essentials of baccalaureate education for professional nursing practice. Retrieved from www.aacn.nche.edu/education-resources/essential-series

- Cronenwett, L., Sherwood, G., Barnsteiner, J., Disch, J., Johnson, J., Mitchell, P., . . . Warren, J. (2007). Quality and safety education for nurses. Nursing Outlook, 55(3), 122-131. doi:10.1016/j.outlook.2007.02.006

- Elfrink Cordi, V. L., Leighton, K., Ryan-Wenger, N., Doyle, T. J., & Ravert, P. (2012). History and development of the Simulation Effectiveness Tool (SET). Clinical Simulation in Nursing, 8(6), e199-e210. doi:10.1016/j.ecns.2011.12.001

- INACSL Standards Committee. (2016). INACSL Standards of Best Practice: SimulationSM. Clinical Simulation in Nursing, 12(Suppl), S1-S50.

- Suggested Webpage Citation: Leighton, K., Ravert, P., Mudra, V., & Macintosh, C. (2018). Simulation Effectiveness Tool – Modified. Retrieved from https://www.healthysimulation.com/tools/evaluating-healthcare-simulation/simulation-effectiveness-tool-modified-set-m/

- If you need an official ‘Permission to Use’ letter, or have questions about this tool, please contact Dr. Kim Leighton.

SET-M (Spanish Version)

How the SET-M (Spanish Version) was translated: A Spanish translation of the Simulation Effectiveness Tool – Modified (SET-M) was performed by Olvera-Cortés et al. (2022) using the RAND Corporation guidelines for this purpose, which include both translation and reverse translation. This process was similar to the one used for the Turkish translation.

Download the Spanish Version of the SET-M.

Method: The data were collected from seventh-semester students who participated in five different telesimulation-based learning experiences in the School of Medicine of the National Autonomous University of Mexico. Descriptive and inferential analyses were performed to determine the psychometric characteristics of the instrument. For this purpose, a discrimination analysis of each dimension was conducted using Student’s t-test, Cronbach’s alpha was used to determine the internal consistency of the test, and an exploratory factor analysis was used to identify its internal structure.

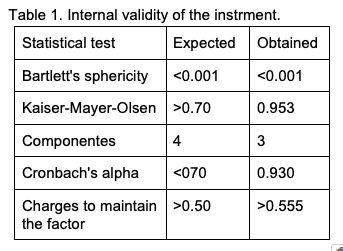

Results: A non-probabilistic sample of 2,479 students was analyzed. Of the total sample, 68% were women and 32% were men. The internal validity of the instrument is shown in Table 1; the principal components were obtained with Varimax rotation. These components were identified as Prebriefing, Learning and Confidence, and Debriefing.

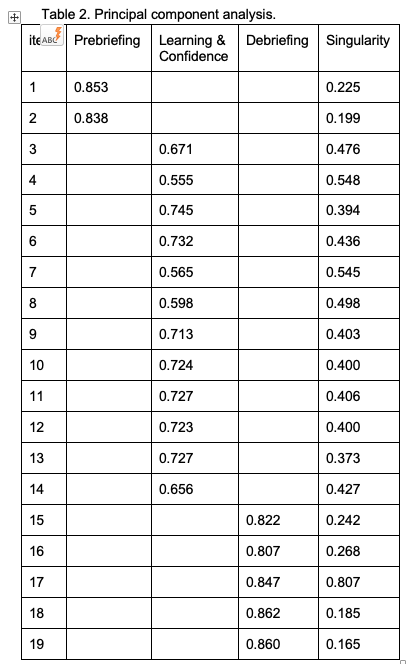

Validity Analysis of the SET-M (Spanish Version): The measurement tool consisted of a 3-factor construct, which explained 64% of the total variance of the measurement tool. The 3 subscales that emerged from the factor analysis were identified as prebriefing (first), learning and confidence (second), and debriefing (third). Table 2 shows the principal component analysis. Items 1 and 2 fell in the first subscale; items 3 through 14 in the second subscale; and items 15, 16, 17, 18, and 19 in the third subscale.

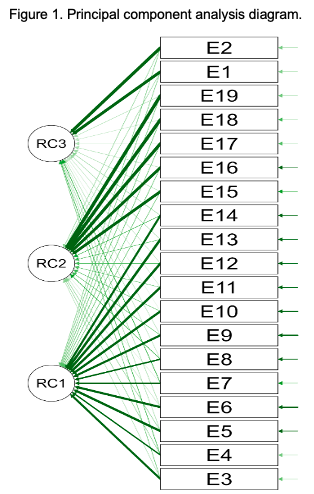

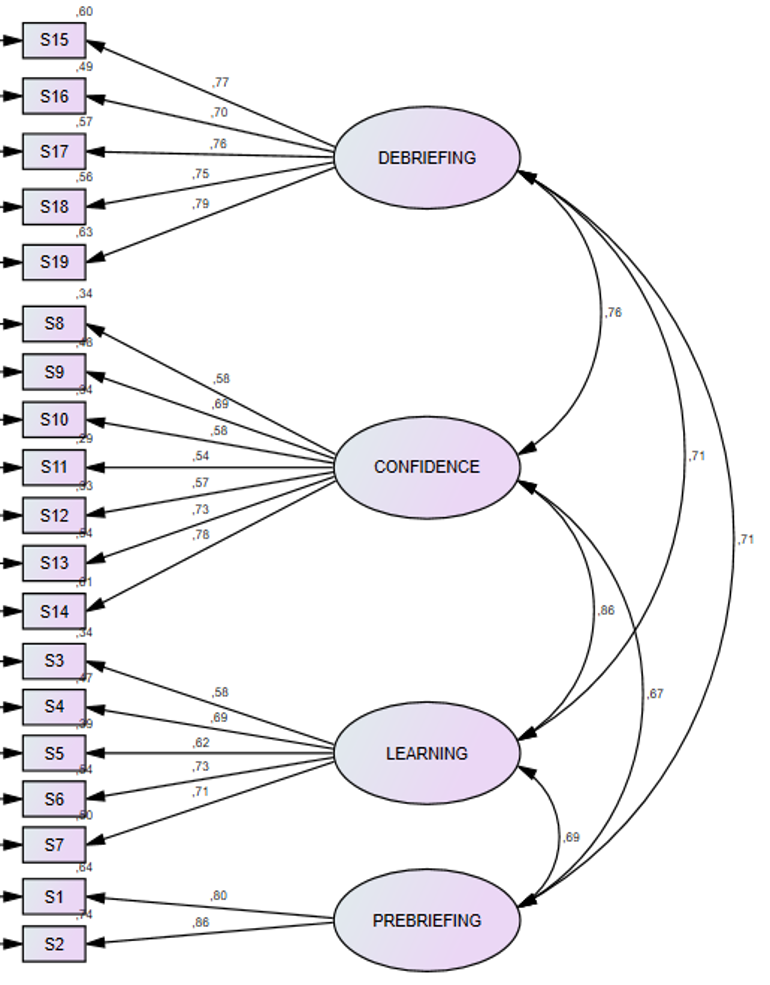

Figure 1 shows a flow diagram of the factor loadings between the relevant items and the factors (subscales) obtained from the factor analysis.

References:

- Olvera-Cortés, H. E., Fernando, D., Argueta-Muñoz, Hershberger, Arena del, Silvia, L., Hernández-Gutiérrez, Guitiérrez-Barreto, S. E. (2022). Evidencias de validez de la versión en español del Simulation Effectiveness Tool – Modified (SET-M) aplicado en telesimulación (Validity evidence of the Spanish version of the Simulation Effectiveness Tool – Modified (SET-M) applied in telesimulation). Educación Médica, 23(2). https://doi.org/10.1016/j.edumed.2022.100730

- Suggested Webpage Citation: Olvera Cortés, H. E., et al. (2022). Translation of the Simulation Effectiveness Tool – Modified to Spanish. Retrieved from https://www.healthysimulation.com/tools/evaluating-healthcare-simulation/simulation-effectiveness-tool-modified-set-m/

- If you need an official ‘Permission to Use’ letter, or have questions about this tool, please contact Dr. Hugo Olvera.

SET-M (Turkish Version)

How the SET-M (Turkish Version) was translated: The Simulation Effectiveness Tool – Modified (SET-M) was translated into Turkish based on the standardized guidelines including forward translation, back translation, cultural adaptation, and pilot testing.

Download the Simulation Effectiveness Tool – Modified (Turkish Version)

Language Validity of the Tool: To establish the language validity of the SET-M, the measurement tool was translated from English to Turkish by the researchers and two translation experts who had good knowledge of both languages. Reverse translation was done by two different translation experts who also had good knowledge of both languages. The researchers and translation experts then reviewed the Turkish and English items together and completed the final editing.

Content Validity of the Tool: The Turkish SET-M, with translation and reverse translation completed, was presented for content validity to 10 experts who were working on clinical simulation in nursing. The experts’ opinions were analyzed with the content validity index, using the following criteria: 1 – not relevant, 2 – somewhat relevant, 3 – quite relevant, 4 – highly relevant. According to the experts’ opinions, the content validity index of the items was 0.95. The Turkish form of the measurement tool was revised following the experts’ opinions, and a pilot study was conducted with 10 nursing students who were not included in the study sample. The measurement tool was not edited after the pilot study.
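The text above does not spell out how the content validity index was computed. A common convention, assumed here and not confirmed by the source, is to take the proportion of experts who rate an item 3 or 4 and then average those proportions across items. The sketch below uses hypothetical function names and made-up ratings, not the study data.

```python
from typing import Dict, List


def item_cvi(ratings: List[int]) -> float:
    """Share of experts rating the item 3 (quite) or 4 (highly relevant)."""
    if not ratings:
        raise ValueError("at least one expert rating is required")
    return sum(1 for r in ratings if r >= 3) / len(ratings)


def scale_cvi(ratings_by_item: Dict[str, List[int]]) -> float:
    """Average of the item-level indexes (assumed averaging approach)."""
    cvis = [item_cvi(r) for r in ratings_by_item.values()]
    return sum(cvis) / len(cvis)


# Made-up ratings from 10 experts for two items.
expert_ratings = {
    "item_01": [4, 4, 3, 3, 4, 4, 3, 4, 2, 4],  # 9 of 10 rate it 3 or 4
    "item_02": [4, 3, 2, 4, 4, 1, 3, 4, 4, 3],  # 8 of 10 rate it 3 or 4
}
print(round(scale_cvi(expert_ratings), 2))
```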

Reliability and Validity of the SET-M (Turkish Version)

Method: The data were collected from 235 students who participated in the simulation-based learning experience in the Faculty of Nursing of two public universities in Istanbul between January and June 2019. In the data analysis, descriptive statistics, exploratory factor analysis with varimax rotation, confirmatory factor analysis, item-total correlation, test-retest correlation, interclass correlation, Pearson correlation, Cronbach’s alpha coefficient, and ceiling-floor effect analysis were conducted.

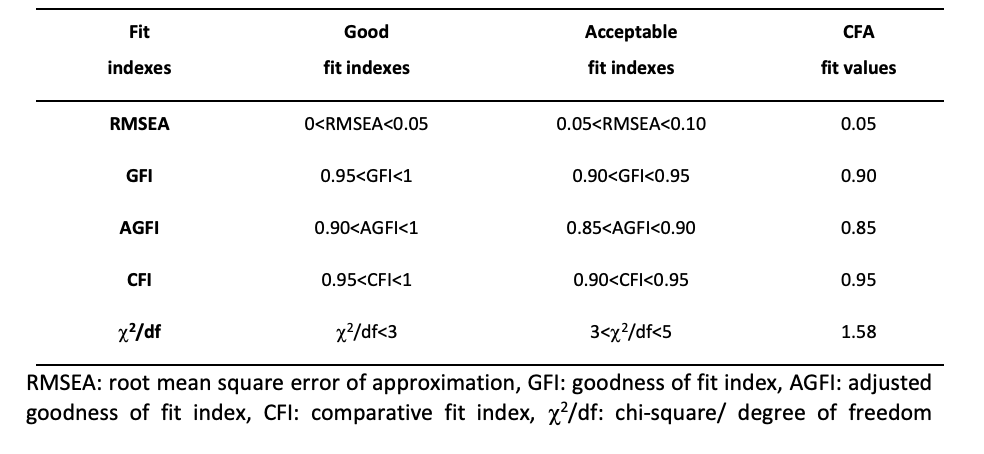

Validity Analysis of the SET-M (Turkish Version): Exploratory factor analysis (EFA), conducted to determine the subscales of the measurement tool, showed that the tool consisted of a 4-factor construct that explained 62.2% of the total variance. The first factor explained 21.03% of the total variance, the second factor 15.97%, the third factor 14.59%, and the fourth factor 10.60%.

The 4 subscales that emerged from the factor analysis were identified as prebriefing (first), learning (second), confidence (third), and debriefing (fourth). The items were distributed as follows:

- Items 1 and 2 in the first subscale,

- Items 3, 4, 5, 6, and 7 in the second subscale,

- Items 8, 9, 10, 11, 12, 13, and 14 in the third subscale, and

- Items 15, 16, 17, 18, and 19 in the fourth subscale.
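The item-to-subscale mapping listed above can be written down directly as data. The sketch below computes one respondent’s mean score per subscale; it assumes the 19 answers are stored in item order and coded 1 to 3, and the names `SUBSCALES` and `subscale_means` are hypothetical. Averaging is only one option, since the tool has no established scoring method.

```python
from typing import Dict, List

# Item numbers per subscale, as listed for the four-factor structure.
SUBSCALES = {
    "prebriefing": range(1, 3),   # items 1-2
    "learning": range(3, 8),      # items 3-7
    "confidence": range(8, 15),   # items 8-14
    "debriefing": range(15, 20),  # items 15-19
}


def subscale_means(scores: List[int]) -> Dict[str, float]:
    """Mean rating per subscale for one respondent's 19 item scores."""
    if len(scores) != 19:
        raise ValueError("expected exactly 19 item scores")
    return {
        name: sum(scores[i - 1] for i in items) / len(items)
        for name, items in SUBSCALES.items()
    }


# Made-up respondent: high on prebriefing and confidence, low on debriefing.
respondent = [3, 3] + [2] * 5 + [3] * 7 + [1] * 5
print(subscale_means(respondent))
```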

Table 2. Fit indexes calculated as a result of confirmatory factor analysis of the measurement:

Flow diagram regarding factor loading between relevant items and factors (subscales) obtained after factor analysis is shown in Figure 1:

Reliability Analysis of the SET-M (Turkish Version)

- The authors identified that the item-total correlations of the Turkish version of the SET-M ranged between r=0.47 and r=0.69.

- The Cronbach’s alpha internal consistency coefficients of the subscales were 0.81 for prebriefing, 0.80 for learning, 0.83 for confidence, and 0.86 for debriefing.

- Stability reliability of the Turkish version of the SET-M was evaluated with the test-retest correlation and interclass correlation. It was determined that the test-retest correlations were positive, strong, and statistically significant for the total measurement tool (r=0.92) and the subscales (r=0.84–0.90; p<0.001). Interclass correlations were identified as 0.92 for total measurement tool, 0.90 for prebriefing subscale, 0.85 for learning subscale, 0.89 for confidence subscale, and 0.84 for debriefing subscale (p<0.001).
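For readers who want to check internal consistency on their own data, Cronbach’s alpha follows the standard formula alpha = k / (k - 1) × (1 - sum of item variances / variance of total scores), where k is the number of items. The sketch below is a plain-Python illustration of that formula, not the authors’ analysis code; the function name and the data are made up.

```python
from statistics import variance
from typing import List


def cronbach_alpha(rows: List[List[int]]) -> float:
    """Cronbach's alpha for a respondents-by-items table of scores."""
    if len(rows) < 2 or len(rows[0]) < 2:
        raise ValueError("need at least 2 respondents and 2 items")
    k = len(rows[0])
    # Sample variance of each item (column) across respondents.
    item_vars = [variance([row[j] for row in rows]) for j in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in rows])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Made-up scores: three respondents answering a two-item subscale.
scores = [
    [1, 2],
    [2, 1],
    [3, 3],
]
print(round(cronbach_alpha(scores), 3))  # 0.667
```

In practice, alpha would be computed separately for each subscale, using only the columns that belong to it.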

References:

- Sahin, G., Buzlu, S., Kuguoglu, S., & Yilmaz, S. (2020). Reliability and validity of the Turkish Version of the Simulation Effectiveness Tool – Modified. Florence Nightingale Journal of Nursing, 28(3), 250-257. doi: 10.5152/FNJN.2020.19157.

- Webpage suggested citation: Sahin, G., Buzlu, S., Kuguoglu, S., & Yilmaz, S. (2020). Translation of the Simulation Effectiveness Tool – Modified to Turkish. Retrieved from https://www.healthysimulation.com/tools/evaluating-healthcare-simulation/simulation-effectiveness-tool-modified-set-m/

- If you need an official ‘Permission to Use’ letter, or have questions about this tool, please contact Gizem Sahin.
