Evaluating Healthcare Simulation: Helpful Resources for Clinical Educators

Evaluating healthcare simulation is a crucial step in ensuring that educators can demonstrate return on investment (ROI) for both learning and program outcomes. Healthcare simulation has proved to be a highly effective education and training tool across the field of medicine. An increased push toward patient safety has led many facilities and institutions to revise their medical education systems, including thoroughly evaluating their simulation-based education. Three sources have independently developed resources to guide these evaluations and to help simulation facilitators assess the status of their clinical simulation education efforts: the International Nursing Association for Clinical Simulation and Learning (INACSL), Harvard University’s Center for Medical Simulation (CMS), and the “Evaluating Healthcare Simulation” toolset.

The INACSL Research Committee lists a repository of instruments used in healthcare simulation research on the organization’s website to assist simulationists around the world. The citations are grouped by area, including skill performance, learner satisfaction, knowledge/learning, critical thinking/clinical judgment, self-confidence/self-efficacy, debriefing, video training tools, facilitator competence, and organization-level evaluation.

Debriefing Assessment for Simulation in Healthcare (DASH): CMS offers the DASH as a healthcare simulation resource. The DASH evaluates the strategies and techniques used to conduct healthcare simulation debriefings by examining concrete behaviors. The tool is based on evidence and theory about how people learn and change in experiential contexts. It is designed to assess debriefings across a variety of disciplines and courses, varying numbers of participants, a wide range of educational objectives, and various physical and time constraints.

Evaluating Healthcare Simulation (2024 update): The Evaluating Healthcare Simulation tools have now migrated to HealthySimulation.com. These tools were developed or compiled by clinical simulation expert Kim Leighton, PhD, RN, CHSOS, CHSE, ANEF, FAAN, as a way for healthcare simulation educators and researchers to assess different aspects of their clinical simulation-based education (SBE). The tools are intended to help clinical educators gain satisfaction and confidence when evaluating SBE as a pedagogy, and to establish reliability and validity when evaluating outcomes. The “Evaluating Healthcare Simulation” toolset, now held exclusively on HealthySimulation.com, presents a number of evaluation instruments that help clinical simulation professionals evaluate their own educational and clinical environments. Because they are freely available, these instruments together support a comprehensive evaluation of a healthcare simulation program.

Facilitator Competency Rubric (FCR): This tool, part of Leighton’s “Evaluating Healthcare Simulation” toolset, arose from a need to evaluate whether a program was effective and to observe any resulting changes in behavior and practice. To examine the overall abilities of a facilitator rather than selected parts (such as debriefing), the developers identified five major constructs and selected subcomponents to define each one. The five constructs are preparation, prebriefing, facilitation, debriefing, and evaluation. Now in final form, the FCR is designed for evaluating healthcare simulation facilitators. Although it was tested in the educational environment, its concepts are broad, and feedback has indicated that the tool is appropriate for both academic and clinical simulation learning environments.

Simulation Effectiveness Tool – Modified (SET-M): The SET-M is another resource designed for evaluating healthcare simulation scenarios. The tool has been tested in nursing and medical educational environments with high-fidelity manikins, the Harvey simulator, and standardized patients. Its concepts are broad, and feedback has indicated that the tool is also appropriate for clinical simulation learning environments.

The SET-M can be administered after every clinical simulation experience or only after selected ones. It is useful for evaluating learners’ perceptions of how effectively the clinical simulation met their learning needs. Results can be used in three ways: for facilitator evaluation, for evaluation of clinical simulation operations personnel, or for scoring. Many schools have learners complete the SET-M after every healthcare simulation scenario in every course, which allows concerns or trends in the data to be identified early. However, “Evaluating Healthcare Simulation” warns that constant use can cause survey fatigue, leading participants to give the same response to every item regardless of their actual opinions.

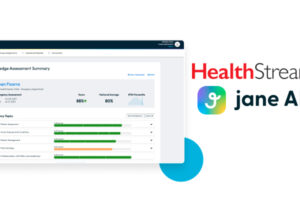

Simulation Culture Organizational Readiness Survey (SCORS): The SCORS was developed to help administrators evaluate institutional and program readiness for integrating healthcare simulation. It also helps organizational leaders understand which components need to be addressed before purchasing clinical simulation equipment. The goal is to make the integration of healthcare simulation into the academic or organizational curriculum more effective and efficient.

Clinical Learning Environment Comparison Survey (CLECS): This healthcare simulation resource can be used to evaluate facilitators and curricula. Now updated as CLECS 2.0, the tool measures pre-licensure nursing students’ perceptions of how well their learning needs are met in three environments: a traditional clinical environment, a face-to-face simulated clinical environment, and a screen-based simulation environment. Educators can use CLECS 2.0 to help determine whether their teaching methods are effectively meeting learners’ needs. It also lets them collect data to support decisions about using screen-based clinical simulation as part of their pandemic response.

ISBAR Interprofessional Communication Rubric (IICR): This tool is designed to reduce the difficulty some nursing students have when communicating with physicians by phone during healthcare simulation-based education. The instrument was developed from two nurse faculty members’ experience in the field and informed by the literature. The IICR guides learners toward communication best practices and enables educators to measure learners’ communication performance for debriefing and instruction. Twelve healthcare providers, including nurses and physicians, participated in the content validation of this instrument.

Actions, Communication, & Teaching in Simulation Tool (ACTS): The ACTS measures the performance of confederates participating in healthcare simulation. It was developed through a process that established face and construct validity, including expert surveys and psychometric evaluation during piloting. Five items are each scored from 0 (inadequate) to 6 (outstanding), giving a possible total score of 0 to 30. ACTS results can be used to evaluate individual confederates, identify development needs, and prioritize confederate development activities.

Lance Baily, BA, EMT-B, is the Founder & CEO of HealthySimulation.com, which he started in 2010 while serving as the Director of the Nevada System of Higher Education’s Clinical Simulation Center of Las Vegas. Lance is also the Founder and acting Advisor to the Board of SimGHOSTS.org, the world’s only non-profit organization dedicated to supporting professionals who operate healthcare simulation technologies. His co-edited book, “Comprehensive Healthcare Simulation: Operations, Technology, and Innovative Practice,” is cited as a key source for professional certification in the industry. Lance’s background also includes serving as a Simulation Technology Specialist for the LA Community College District, EMS firefighting, Hollywood movie production, rescue diving, and global travel. He and his wife, Abigail Baily, PhD, live in Las Vegas, Nevada, with their two amazing daughters.
